1. Memory Capping

SQL Server is an application that uses as much memory as is provided to it. Setting memory too high can cause other applications to compete with SQL on the OS and setting memory too low can cause serious problems like memory pressure and performance issues. It is important to provide at least 4 GB memory for OS use, if you are running only SQL Server as an application on the server.

2. Data File Locations

SQL Server accesses data and log files with very different I/O patterns. Data file access is mostly random whilst transaction log file access is sequential. Spinning disk storage requires re-positioning of the disk head for random read and write access. Sequential data is therefore more efficient than random data access. Separating files that have different access patterns helps to minimize disk head movements, and thus optimizes storage performance.

3. Auto growth

Auto-growth of the database files should be set in MBs as it will allocate that much chunk of space at one time on disk. If the large database was based on the percentage then, it will take more space that is required to handle the growth. This causes these large database files to be over allocated from the disk space.

Auto-growth of the database files is recommended to be set to some specific no. depending upon the growth in your database because setting up the growth to unlimited causes the database to outgrow the disk space and causing disk space issues or worst-case database could go offline.

The auto growth option should be changed to a digit calculated so that the database files do not fill up all the available space and does not runs out of space on the database as well.

4. TempDB Configuration

Beginning with SQL server 2016, the number of CPU cores visible to the operating system is automatically detected during installation, and based on that number, SQL calculates and configures the number of Tempdb files required for optimum performance.

One other thing worth looking at in relation to tempdb is Trace Flag 1118 (Full Extents Only)

With Trace Flag 1118 enabled each newly allocated object in every database on the instance gets its own private 64KB of data. The impact is greatest in tempdb where most objects are created. Up to SQL Server 2014 we had Trace Flag 1118 which is used to allocate a Uniform Extent instead of Mixed Extents to minimize contention in extent allocation. If this trace flag is enabled, then the first 8 data pages for tables were also Uniform Extents rather than Mixed Extents.

In SQL Server 2016, this uniform extent allocation is the default behavior and we can change this behavior if required by using an ALTER DATABASE command, however we cannot change the behavior for the TempDB database.

5. Minimum Memory Per Query

This option specifies the minimum amount of memory (in kilobytes) that will be allocated for the execution of a query. This is also known as the minimum memory grant. The default value is 1,024 KB.

6. CPU Allocation

The execution of the query depends on the no. of joins, no. of functions or other parameters being used in the query. This setting where the resource i.e. CPU will be assigned to the SQL Server requests is important. If the check box is tick, SQL Server will automatically take care of the CPU that will be assigned for each execution.

7. MAX DOP

When an instance of SQL Server runs on a computer that has more than one microprocessor or CPU, it detects the best degree of parallelism, that is, the number of processors employed to run a single statement, for each parallel plan execution. You can use the max degree of parallelism option to limit the number of processors to use in parallel plan execution. To enable the server to determine the maximum degree of parallelism, this option is set to 0.

8. Isolation Level

What this means is that the user will always be reading the data which is committed or final. Thereby avoiding the confusion whether the data is correct or not which is read by the user. This setting can be changed as per requirement of business.

9. Recovery Models & Backups

It is important that if the database has been kept in full recovery model, log backup is configured for the DB. Log backup configuration ensures that the database can be recovered back at the point in time and the data is not lost.

10. Fill Factor

The fill-factor option is provided for fine-tuning index data storage and performance. When an index is created or rebuilt, the fill-factor value determines the percentage of space on each leaf-level page to be filled with data, reserving the remainder on each page as free space for future growth. The fill-factor value is a percentage from 1 to 100, and the server-wide default is 0 which means that the leaf-level pages are filled as per the capacity available.

11. Remote Query Timeout

The remote query timeout option specifies how long, in seconds, a remote operation can take before SQL Server times out. The default value for this option is 600, which allows a 10-minute wait. This value applies to an outgoing connection initiated by the Database Engine as a remote query. This value has no effect on queries received by the Database Engine.

12. Lock Pages in Memory

This Windows policy determines which accounts can use a process to keep data in physical memory, preventing the system from paging the data to virtual memory on disk. This is really needed when you need to preserve SQL Server working set, which is necessary when the same Windows machine hosts other services or applications that may end up competing with SQL Server for RAM. If the Dev environment hosts any other application that is memory extensive, in that case we can add SQL Server Service account here so that it boosts the performance.

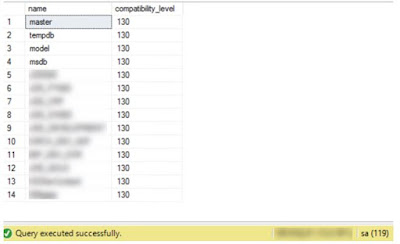

13. Database Compatibility Level

DB compatibility level should be set to highest possible supported by the SQL Server installed. If SQL Server version installed is 2016, compatibility level of the database should be set to 130. It is important to change compatibility level for future since newer versions of SQL Server might not support the previous version of database development.

Compatibility level of all the databases should be changed to the latest one if code is compatible with the higher one. We need to check with the application team before making this change. If application team is fine with upgrading the compatibility of the databases, we can change it to the latest one as per the SQL Server version.

14. Backup Compression

Backup compression is an instance level option that helps in compressing the backups when taken on disk. This is not a mandatory option, but it can really help you in saving disk space while taking a disk backup. The compressed backup is usually 10 times smaller than normal full backup. If the option is enabled on the instance level, whenever you will be taking backup you don’t have to explicitly specify compression option. We should enable the compress backup option on the instance to save disk space in future.

15. SQL Server Logs

SQL Server Error Log files contain informational messages, warnings, critical server events, auditing information etc. And this information in the log file is very critical for analyzing any SQL Server issues. If proper steps towards the maintenance of the SQL Server Error Logs is not taken, then the error log file size may grow very large making it time consuming and difficult to analyze the issues. And, if proper maintenance is not taken care we lose the history of the error logs. So, it’s important to configure errorlog nos. just enough to analyze issue and to limit its size so that it does not grows out to fill up the disk. It’s always good to maintain 99 errorlogs, with 10 MB max size which gets recycled every day. We can set the no. of logs before recycling to 99 and limited the log size to max. 10 MB.

For limiting the size of each log file being created, we need to either run the query as mentioned in the screenshot below or make changes in the registry for ErrorLogSizeinKb parameter.

16. Dedicated Administrator Connection

DAC is a diagnostic connection that is designed to allow an administrator to troubleshoot SQL Server when the instance is having problems. This is a special type of connection which for example bypasses logon triggers. Only members of the sysadmin role can connect using the DAC. You can have only one active admin connection at a time. We should enable DAC for the instance so that in case of issues, we can troubleshoot the SQL problems.

17. Service Pack and Cumulative Update

It is important that SQL Server should be patched with latest Service Pack and n-1 Cumulative Update so that it is always up to date. The compatibility of the new build nos. can be checked with application and if the application is compatible with the latest versions, SQL must be upgraded. The build no. and the SP can we checked using the screen capture below:

18. Guest Users

It is recommended to disable guest user in every database as a best practice for securing the SQL Server. Guest user permits access to a database for any logins that are not mapped to a specific database user. The guest user cannot be dropped but it can be disabled by revoking the CONNECT permission.

19. Isolating Services

Isolating services reduces the risk that one compromised service could be used to compromise others. To isolate services run separate SQL Server services under separate Windows accounts. Whenever possible, use separate, low-rights Windows or Local user accounts for each SQL Server service.

20. Service Start Mode

SQL Server Database engine services should always be in automatic start mode so that whenever the system comes up, it gets started automatically. SQL Server Agent is dependent on SQL Server Service. So, when the database engine comes up, Agent will also try to come up. SQL Server Browser service is usually in disabled state on the servers where we have just one database instance. Browser service allows incoming client connections to request information on UDP port 1434.

21. C2 Auditing

Selecting this option will configure the server to record both failed and successful attempts to access statements and objects. This information can help you profile system activity and track possible security policy violations. Enabling this option is completely based on business requirement.

22. Network Protocols

If you are running your server application on a local computer which also runs SQL Server, then Shared Memory or Named Pipes protocol is your best choice. However, Named Pipes get loaded in kernel mode of the operating system and are much faster on the local machine. Note: This is only true if both the application and SQL Server are running on the same machine and if not then it would use Network Pipes that can be used over Local Area Networks. If your Local Area Network is fast, both TCP/IP and Named Pipes would perform with the same speed. However, if there are more requests waiting to hit the server then Named Pipes may encounter pipe-busy error when trying to connect to SQL Server whereas, TCP/IP sockets doesn’t experience this problem as it supports backlog queue for any number of requests.

23. Security

There are many aspects of security that should be taken care of when configuring SQL Server. Few of them are listed here such as the SQL Server username and password should not be same. It makes the system exposed to security breaches. The passwords used in the SQL Server login should be 10 characters long, should have alphanumeric characters and special characters. It should follow password policy.

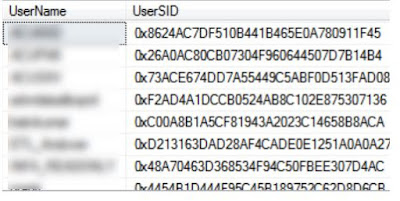

24. Orphaned Users

Most of the time, a database user is linked to a SQL Server login. We do this to tell SQL Server that a SQL login has access to database using the context and permissions of database user. This mapping is stored using a unique identifier called “SID”. This mapping can be “broken” in many ways, that leaves database users without any link to a login. When this happens, we refer to such a database user as an orphaned database user. This issue usually occurs because of the following reasons:

• Dropping a SQL Login using SSMS, even though we understand this will happen as SSMS shows a warning.

• When using mixed authentication mode, restoring a database backup to another SQL Server instance.

• After a database mirroring failover.

To fix this, we can follow two ways:

• Drop them as they are no longer needed

• Link them back to the SQL Server login with the appropriate name

25. Missing Indexes

There are multiple ways in SQL Server through which we can get the information about the missing indexes in SQL databases. The problem with just blindly creating this index is that SQL Server has decided that it is useful for a query (or handful of queries), but completely and unilaterally ignores the rest of the workload. So, before going ahead with creation of the index suggested by SQL Server, you must check with the developer about the queries that are running, creating the indexes will solve any issues or might cause any other troubles.

26. Antivirus Exclusion

Although antivirus software is a very important part of security planning, it is important to understand the effect it has on SQL Server files. After rebooting a server, if the antivirus software locks a SQL Server file before SQL Server can gain access to that file, potential issues ranging from SQL Server not being able access those files to possible database corruption may occur. In several cases, SQL Server refuses to start sometimes or the CPU / IO seems to be stalling sometimes. To avoid such issues, it is good practice to exclude SQL Server files from antivirus scan.

27. Auto-Close, Auto-Shrink, Auto-Update Statistics

Auto Close must be set to off. When AUTO_CLOSE is set ON, it causes performance degradation on heavily used databases by increasing overhead of opening and closing the database after each connection. Additionally, when it is ON, it also flushes (removes) the procedure cache after each connection.

Auto shrinking the database comes with a performance hit. If you know that the space that you are reclaiming will not be needed in the future, you can reclaim the space by manually shrinking the database.

When the automatic update statistics option, AUTO_UPDATE_STATISTICS is ON, the Query Optimizer determines when statistics might be out-of-date and then updates them when they are used by a query. Statistics become out-of-date after insert, update, delete, or merge operations change the data distribution in the table or indexed view. The Query Optimizer determines when statistics might be out-of-date by counting the number of data modifications since the last statistics update and comparing the number of modifications to a threshold. We should keep the async parameter of the auto update statistics to true.

Up to SQL Server 2014, SQL Server uses a threshold based on the percent of rows changed. This is regardless of the number of rows in the table. The thresholds are:

Old threshold: it takes 20% of row changes before auto update stats kicks. For a table with 100 million rows, it requires 20 million row change for auto stats to kick in. For clear majority of large tables, auto stats basically don’t do much.

New threshold: In SQL 2016, this new threshold is enabled by default for a database with compatibility level 130, which causes more frequent update.

In short:

SQL Server 2014 or below: default is the old threshold. You can use trace flag 2371 to activate new threshold

SQL Server 2016: Default is new threshold if database compatibility level is 130. If database compatibility is below 130, old threshold is used (unless you use trace flag 2371)

Statistics updates can be either synchronous (the default) or asynchronous. With synchronous statistics updates, queries always compile and execute with up-to-date statistics; When statistics are out-of-date, the Query Optimizer waits for updated statistics before compiling and executing the query. With asynchronous statistics updates, queries compile with existing statistics even if the existing statistics are out-of-date.

28. Maintenance Jobs

For any SQL Server database engine, it is important to have maintenance solution which takes care of basically servicing the databases regularly. This SQL Server Maintenance Solution comprises of integrity checks, and index and statistics maintenance.

Maintenance tasks should be created in the instance which will take care of integrity checks, index optimization, cleanup files, recycle error logs, checking growth on disk and archiving the database mail.

Jobs to be created on SQL Server:

• Cleanup CommandLog

• Cleanup Job history

• Cleanup Output file

• Daily Errorlog Recycle

• Database Integrity Check

• Database Index Optimization

Comments

Post a Comment